Aim 🥅

Explore MCP, see how I can run it on Arch

TL;DR 🩳

- MCP: Model Context Protocol, a protocol for adding tools to LLMs, giving them standardized access to the real world.

- MCP Server: A server defines tools (more simply, functions) that we write for LLMs, giving them real-world context. It can be a live cricket score feed or anything, just anything.

- MCP Client: A piece of code that uses the protocol to talk to the server; this is what actually grants the LLM access to the tools.

- I can say that the LLM is the boss man and the MCP client is his secretary, who keeps him updated about everything happening around. The secretary goes to the MCP server, which is like the manager, who then gets some data from employees or instructs them to do something.

- I can add my custom MCP server to Claude Code using:

    claude mcp add weather-server -- uv run --directory /location_to_repo/PythonProjects/ai/mcp_101/weather-server python weather.py

What's on the plate 🍽?

- MCP: A protocol, standardizes how to provide context to LLMs. Like a USB-C port!

- To interact with data & tools.

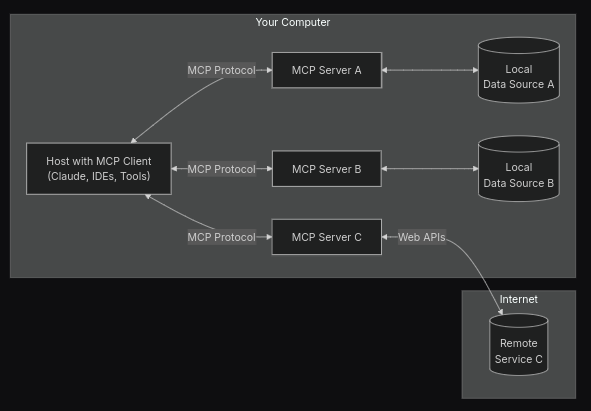

- Follows a Client-Server architecture

- MCP Hosts: Claude Desktop, IDEs, AI tools that want to access data via MCP

- MCP Servers: Programs exposing access through MCP, like PostCrawl MCP, Wikipedia MCP, etc.

- MCP Clients: Clients that each keep a 1:1 connection to an MCP server

- Local Data Sources: files/services/db that MCP servers access

- Remote Services: anything else that MCP servers access, mostly via APIs, like a Stocks API, a Weather API, etc.
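To make the roles above concrete, here is a toy sketch in plain Python with no MCP SDK involved. The class names `ToyServer`, `ToyClient`, and `ToyHost` are made up for illustration, and real clients speak the protocol over a transport instead of calling Python functions directly. The only point is the shape: one host, several servers, and exactly one client per server.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToyServer:
    """Stands in for an MCP server: a name plus the tools it exposes."""
    name: str
    tools: dict[str, Callable[..., str]] = field(default_factory=dict)


@dataclass
class ToyClient:
    """Holds a connection to exactly one server (the 1:1 rule)."""
    server: ToyServer

    def list_tools(self) -> list[str]:
        return sorted(self.server.tools)

    def call_tool(self, tool_name: str, **kwargs) -> str:
        return self.server.tools[tool_name](**kwargs)


@dataclass
class ToyHost:
    """Stands in for a host app: it owns one client per connected server."""
    clients: dict[str, ToyClient] = field(default_factory=dict)

    def connect(self, server: ToyServer) -> None:
        self.clients[server.name] = ToyClient(server)


weather = ToyServer("weather", {"get_forecast": lambda city: f"Sunny in {city}"})
wiki = ToyServer("wikipedia", {"summary": lambda topic: f"{topic}: a summary"})

host = ToyHost()
host.connect(weather)
host.connect(wiki)
print(host.clients["weather"].call_tool("get_forecast", city="Pune"))
```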

- MCP Server:

- Servers can connect to any client, be it Claude Desktop, any other AI tool, or even a custom client, which we shall build soon...

- Servers provide:

- Resources: like API responses (from a db or a service), file content

- Tools: functions that can be called by the LLM, like an `addition` tool

- Prompts: pre-written templates

- We can make a simple server following the [official docs](https://modelcontextprotocol.io/quickstart/server#why-claude-for-desktop-and-not-claude-ai), but here is a quick breakdown of that:

- We initialize the MCP server with FastMCP, the same way we would create a simple FastAPI app:

    from mcp.server.fastmcp import FastMCP

    mcp = FastMCP("weather")

- We can then define our internal functions, basic stuff. So let's focus on the next part: adding the `tool` to our MCP server, very similar to how we define a route in FastAPI:

    @mcp.tool()
    async def get_alerts(state: str) -> str:
        """Get weather alerts for a US state.

        Args:
            state: Two-letter US state code (e.g. CA, NY)
        """
        url = f"{NWS_API_BASE}/alerts/active/area/{state}"
        data = await make_nws_request(url)

        if not data or "features" not in data:
            return "Unable to fetch alerts or no alerts found."

        if not data["features"]:
            return "No active alerts for this state."

        alerts = [format_alert(feature) for feature in data["features"]]
        return "\n---\n".join(alerts)
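The tool above leans on `NWS_API_BASE`, `make_nws_request`, and `format_alert`, which these notes skip as "basic stuff". Here is a minimal sketch of what they could look like, assuming the National Weather Service endpoint at `https://api.weather.gov` and GeoJSON alert features carrying `event`, `areaDesc`, `severity`, and `description` properties. The official quickstart uses `httpx` for the request; this version swaps in the standard library's `urllib` running in a worker thread, and `_fetch_json` and `USER_AGENT` are names invented here, so treat it as an illustration rather than the repo's actual code.

```python
import asyncio
import json
import urllib.request
from typing import Any, Optional

NWS_API_BASE = "https://api.weather.gov"  # assumed base URL
USER_AGENT = "weather-app/1.0"            # assumed identifier sent with each request


def _fetch_json(url: str) -> dict[str, Any]:
    """Blocking GET that returns the decoded JSON body."""
    request = urllib.request.Request(
        url,
        headers={"User-Agent": USER_AGENT, "Accept": "application/geo+json"},
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


async def make_nws_request(url: str) -> Optional[dict[str, Any]]:
    """Fetch JSON without blocking the event loop; return None on any failure."""
    try:
        return await asyncio.to_thread(_fetch_json, url)
    except Exception:
        return None


def format_alert(feature: dict[str, Any]) -> str:
    """Turn one alert feature into a short readable block of text."""
    props = feature.get("properties", {})
    return "\n".join([
        f"Event: {props.get('event', 'Unknown')}",
        f"Area: {props.get('areaDesc', 'Unknown')}",
        f"Severity: {props.get('severity', 'Unknown')}",
        f"Description: {props.get('description', 'No description available')}",
    ])
```

Returning `None` on failure is what lets `get_alerts` fall through to its "Unable to fetch alerts" message instead of raising inside the tool.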

- Then just run it:

    if __name__ == "__main__":
        # Initialize and run the server
        mcp.run(transport='stdio')
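To get a feel for what `transport='stdio'` means, here is a toy sketch of the idea: MCP messages are JSON-RPC 2.0, and over stdio each message travels as one line of JSON. The `tool` decorator, `handle`, and `serve` below are hypothetical names and not how FastMCP is implemented; the sketch also skips the initialization handshake, input schemas, and proper error handling that a real server has. In-memory streams stand in for the real stdin and stdout.

```python
import io
import json
from typing import Callable, TextIO

TOOLS: dict[str, Callable[..., str]] = {}


def tool(func: Callable[..., str]) -> Callable[..., str]:
    """Register a function under its own name (toy stand-in for @mcp.tool())."""
    TOOLS[func.__name__] = func
    return func


@tool
def add(a: int, b: int) -> str:
    """Add two numbers."""
    return str(a + b)


def handle(request: dict) -> dict:
    """Answer one JSON-RPC request for the two methods this toy supports."""
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        result = {"tools": [{"name": n, "description": f.__doc__} for n, f in TOOLS.items()]}
    elif method == "tools/call":
        text = TOOLS[params["name"]](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}]}
    else:
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


def serve(stdin: TextIO, stdout: TextIO) -> None:
    """Read one JSON message per line and write one JSON reply per line."""
    for line in stdin:
        if line.strip():
            stdout.write(json.dumps(handle(json.loads(line))) + "\n")


# Simulate a client by feeding two requests through in-memory streams.
fake_in = io.StringIO(
    json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"}) + "\n"
    + json.dumps({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                  "params": {"name": "add", "arguments": {"a": 2, "b": 3}}}) + "\n"
)
fake_out = io.StringIO()
serve(fake_in, fake_out)
replies = [json.loads(line) for line in fake_out.getvalue().splitlines()]
print(replies[1]["result"]["content"][0]["text"])
```

Swapping the fake streams for `sys.stdin` and `sys.stdout` would turn this into a process that a parent program can drive over pipes, which is roughly what a client does when it launches a stdio server.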

- MCP Client

- A client is anything that can talk to the MCP server and access the tools there. It can be the Claude Desktop app, Cursor, MCP Inspector, or just a simple Python script. Let's make one, referring to the official MCP client docs.

- A client should have an `mcp.ClientSession` attribute, an LLM attribute like `anthropic` or `openai` or anything else, and an "exit stack", `contextlib.AsyncExitStack`:

    import asyncio
    from typing import Optional
    from contextlib import AsyncExitStack

    from mcp import ClientSession, StdioServerParameters
    from mcp.client.stdio import stdio_client

    from openai import OpenAI
    from dotenv import load_dotenv

    load_dotenv()  # load environment variables from .env

    class MCPClient:
        def __init__(self):
            # Initialize session and client objects
            self.session: Optional[ClientSession] = None
            self.exit_stack = AsyncExitStack()
            # process_query below calls self.openai, so the LLM client here is OpenAI
            self.openai = OpenAI()  # reads OPENAI_API_KEY from the environment

        # methods will go here

- Now we want a method to connect to the server. Simple again: it expects the path to our MCP server code (a .py or .js file), uses `mcp.StdioServerParameters` to set up the command and args, then enters the async contexts through `contextlib.AsyncExitStack` and initializes the `session`. This session is our live connection to the MCP server we wrote, and through it we can see all the tools the server defines. See the contextlib docs for more: `AsyncExitStack` is the async version of `ExitStack`, "a context manager that is designed to make it easy to programmatically combine other context managers and cleanup functions, especially those that are optional or otherwise driven by input data."

    async def connect_to_server(self, server_script_path: str):
        """Connect to an MCP server

        Args:
            server_script_path: Path to the server script (.py or .js)
        """
        is_python = server_script_path.endswith('.py')
        is_js = server_script_path.endswith('.js')
        if not (is_python or is_js):
            raise ValueError("Server script must be a .py or .js file")

        command = "python" if is_python else "node"
        server_params = StdioServerParameters(
            command=command,
            args=[server_script_path],
            env=None
        )

        stdio_transport = await self.exit_stack.enter_async_context(stdio_client(server_params))
        self.stdio, self.write = stdio_transport
        self.session = await self.exit_stack.enter_async_context(ClientSession(self.stdio, self.write))

        await self.session.initialize()

        # List available tools
        response = await self.session.list_tools()
        tools = response.tools
        print("\nConnected to server with tools:", [tool.name for tool in tools])
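The exit stack is the least obvious part of `connect_to_server`, so here is a small standalone sketch of what it buys us. The `resource` context manager and the `Holder` class are hypothetical stand-ins for `stdio_client(...)`, `ClientSession(...)`, and the client class; only the `contextlib` and `asyncio` calls are real. It shows that contexts entered inside one method stay open after that method returns, and are closed later in reverse order.

```python
import asyncio
from contextlib import AsyncExitStack, asynccontextmanager

events: list[str] = []


@asynccontextmanager
async def resource(name: str):
    """Hypothetical stand-in for stdio_client(...) or ClientSession(...)."""
    events.append(f"open {name}")
    try:
        yield name
    finally:
        events.append(f"close {name}")


class Holder:
    def __init__(self) -> None:
        self.exit_stack = AsyncExitStack()

    async def connect(self) -> None:
        # Each context is entered now but stays open after this method returns.
        self.transport = await self.exit_stack.enter_async_context(resource("transport"))
        self.session = await self.exit_stack.enter_async_context(resource("session"))

    async def cleanup(self) -> None:
        # Closes everything that was entered, most recent first.
        await self.exit_stack.aclose()


async def main() -> None:
    holder = Holder()
    await holder.connect()
    events.append("working")
    await holder.cleanup()


asyncio.run(main())
print(events)
# ['open transport', 'open session', 'working', 'close session', 'close transport']
```

With a plain `async with` block the session would be closed as soon as `connect` returned, which is why the client keeps the stack as an attribute. A matching cleanup method that awaits `self.exit_stack.aclose()` is the natural counterpart when the client shuts down.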

- Now we have a basic client which can talk to the server, so let's add a method to handle the user's input. I have added comments at each crucial part of the code.

    async def process_query(self, query: str) -> str:  # just take in the user's query as a string
        """Process a query using OpenAI and available tools"""
        import json  # decodes tool arguments below; move to the top of the file in real code

        messages = [
            {
                "role": "user",
                "content": query
            }
        ]

        # let's throw in all the available tools with their description & schema to our llm so it can use them
        response = await self.session.list_tools()
        available_tools = [{
            "type": "function",
            "function": {
                "name": tool.name,
                "description": tool.description,
                "parameters": tool.inputSchema
            }
        } for tool in response.tools]

        # Initial OpenAI API call
        # and there you go, simple as that, we just throw in the tools which we unwrapped using the Model Context Protocol
        response = self.openai.chat.completions.create(
            model="gpt-4o-mini",
            max_tokens=1000,
            messages=messages,
            tools=available_tools
        )

        # Process response and handle tool calls, quite basic, just parse the stuff coming out of the llm
        final_text = []
        assistant_message = response.choices[0].message

        if assistant_message.content:
            final_text.append(assistant_message.content)

        # Handle tool calls
        # yeah if the llm asks for a tool call, then we just update that in the chat like this
        if assistant_message.tool_calls:
            messages.append({
                "role": "assistant",
                "content": assistant_message.content,
                "tool_calls": assistant_message.tool_calls
            })

            for tool_call in assistant_message.tool_calls:
                tool_name = tool_call.function.name
                # arguments arrive as a JSON string, so decode them with json.loads rather than eval
                tool_args = json.loads(tool_call.function.arguments)

                # Execute tool call
                # here is the main deal, we are sending in all the params to our tool to process it
                result = await self.session.call_tool(tool_name, tool_args)
                final_text.append(f"[Calling tool {tool_name} with args {tool_args}]")

                # post all that, we are just appending the response from the tool call. I think the role
                # should be something like tool.tool_name for better context for our LLM, but OpenAI has
                # this value fixed to the literal "tool" when the message is from a tool. Bad OpenAI
                # Add tool result to messages
                messages.append({
                    "role": "tool",
                    "tool_call_id": tool_call.id,
                    "content": str(result.content)
                })

            # Get next response from OpenAI, once every tool call has its matching tool message
            # now we have the tool calls made and we just throw that into our llm: the requests made through tools and their responses
            response = self.openai.chat.completions.create(
                model="gpt-4o-mini",
                max_tokens=1000,
                messages=messages,
            )

            # voila, we just made a call to a tool using MCP
            if response.choices[0].message.content:
                final_text.append(response.choices[0].message.content)

        return "\n".join(final_text)
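The two mechanical steps inside `process_query`, reshaping the MCP tool list for the LLM and decoding the arguments the LLM sends back, can be tried in isolation without a server or an API key. In this sketch `to_openai_tool`, `parse_tool_arguments`, and `fake_tool` are hypothetical names, and a `SimpleNamespace` stands in for one entry of the tool list returned by the session. The arguments arrive as a JSON string, so `json.loads` is the natural decoder; `eval` would choke on JSON literals such as `true` or `null` and would execute whatever text the model returned.

```python
import json
from types import SimpleNamespace
from typing import Any


def to_openai_tool(tool: Any) -> dict[str, Any]:
    """Map an MCP tool description onto OpenAI's function-tool shape."""
    return {
        "type": "function",
        "function": {
            "name": tool.name,
            "description": tool.description,
            "parameters": tool.inputSchema,
        },
    }


def parse_tool_arguments(raw: str) -> dict[str, Any]:
    """Decode the JSON string the model sends; never execute it."""
    args = json.loads(raw) if raw else {}
    if not isinstance(args, dict):
        raise ValueError("tool arguments must be a JSON object")
    return args


# Hypothetical stand-in for one entry of (await session.list_tools()).tools
fake_tool = SimpleNamespace(
    name="get_alerts",
    description="Get weather alerts for a US state.",
    inputSchema={
        "type": "object",
        "properties": {"state": {"type": "string"}},
        "required": ["state"],
    },
)

openai_tool = to_openai_tool(fake_tool)
args = parse_tool_arguments('{"state": "CA", "urgent": true}')
print(openai_tool["function"]["name"], args)
```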

The official docs also add a chat loop around this, but that is just for UX; for understanding how to use MCP, this is more than enough. We created a server with some tools and a client which uses OpenAI. You can find the code here: https://github.com/ps428/PythonProjects/tree/master/ai/mcp_101

References 📘

- http://modelcontextprotocol.io/docs